Growing applications of AI techniques vs. growing concerns: how to ease the tension?

The objective of this article is to present the participative initiative "responsible and trustworthy data science" that we initiated in mid-2019 and have been leading since then. I will follow the thread of the presentation I made at the "Big data & ML" meetup on September 29, 2020. I hope that this blog format will allow as many people as possible to get to know this initiative, perhaps to react to it, or maybe even to join the effort and contribute! All feedback is welcome: it feeds both the thinking process and the implementation work, and we need it!

There is a growing tension between the interest in AI techniques and the concerns they generate

Interest in "AI", and in data science in general, has been growing for several years. Online courses and master's curricula are multiplying, as are software tools, publications, specialized firms, products and services: the list is endless. At the time of writing this article, this surge has not yet fully materialized in IT systems in production, but it is getting closer, and the acceleration is noticeable. No need to convince you if you have reached this article!

But where AI seems promising and generates a growing interest for many use cases, it also raises concerns, and each month that passes sees its share of failed launches and scandals of varying degrees of magnitude. The list of "Awful AI" maintained by David Dao is full of them; one that particularly struck me over the past year is the Apple Card as illustrated by the tweets below, showing that even the most advanced technological giants are not immune to these risks:

There is therefore a growing tension between the potential of and interest in AI techniques on the one hand, and the difficulty of trusting these techniques or their implementations on the other (whether by private actors like Apple in the previous example, Tesla in this example, or by public actors, cf. COMPAS on parole in the USA, the yearly controversies around Parcoursup in France, unemployment benefits in the Netherlands, and many others). In this context, it becomes more and more difficult for an organization to implement data science approaches in its products and services and to stand behind them publicly.

Resolving this tension requires a new framework of data science practices

Obviously this tension is not new and certain risks are very real, and it seems to me that there is a general consensus that we need to create structuring and reassuring frameworks. Just type "AI and ethics" or "responsible AI" in a search engine to see the proliferation of initiatives in this area. For example, by the end of 2019 we had already identified three meta-studies on ethical and responsible AI principles:

The global landscape of AI ethics guidelines, A. Jobin, M. Ienca, E. Vayena, June 2019.

A Unified Framework of Five Principles for AI in Society, L. Floridi, J. Cowls, July 2019.

The Ethics of AI Ethics: An Evaluation of Guidelines, T. Hagendorff, October 2019.

So there's a lot of material out there. However, after diving deep into it, we certainly resurface with our intellectual curiosity satisfied (and a lot of new tabs open in our browser...), but a little perplexed: what to do with all this, concretely?

The prevailing feeling is that it's very interesting but you don't really know what to do with it. These "frameworks", often lists of cardinal principles, do not offer a concrete, operational hook. How to position oneself? How to evaluate one's organization? What should we work on to "comply" with these principles?

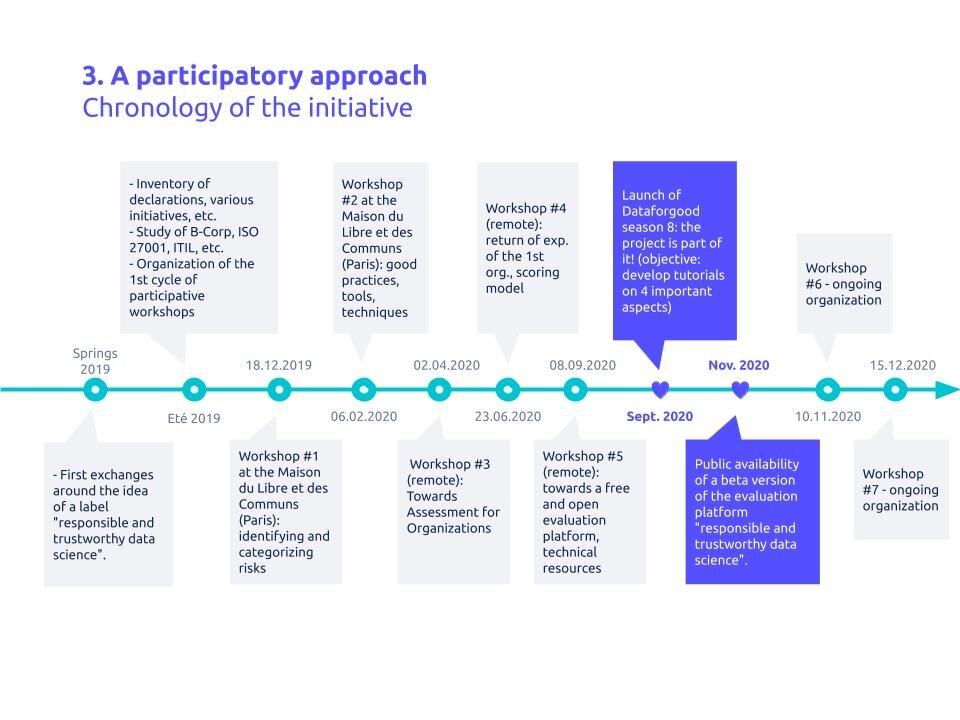

We started a participative and iterative initiative to develop a "responsible and trustworthy data science" evaluation

It was by asking ourselves these questions and working on them in early 2019 that we started to see the value of exploring this theme and developing a tool intended for practitioners, useful and actionable as soon as possible. These preliminary thoughts quickly turned into a real project, fitting well within our organisation (given Substra Foundation's raison d'être: develop collaborative, responsible and trustworthy data science!).

A first obvious observation was that a participative approach was needed: it was impossible to deal with such a vast, complex, technical subject by ourselves, and it would be necessary to bring together diverse skills and points of view. A second observation came naturally: it would be an iterative approach, because it seemed unimaginable to work for a period of time, publish this work and move on to something else. The field evolves quickly and the perspectives are multiple (large company, public organization, small start-up, specialized consultants, regulators, etc.), so we would have to start somewhere and improve over time.

More than a year later, after five participatory workshops and countless discussions, tests with organisations and presentations, the initially vague concept of a "useful and actionable framework" has taken shape. It has become:

an evaluation of responsible and trustworthy approaches to data science;

for organizations, to assess their maturity level;

composed of around 30 assessment elements, grouped into 5 thematic sections;

which yields a summary score out of 100 points (theoretical maximum); as of today we consider 50/100 to be a very advanced level of maturity;

which is complemented by technical resources for each evaluation point, constituting good entry points for newcomers on any given topic of the evaluation.
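To make the structure described above more tangible, here is a minimal sketch in Python of how points earned on assessment elements could be aggregated per section and into a score out of 100. It is only an illustration under my own assumptions: the `Element` class, the section and element names, the point values and the simple "earned over maximum" normalization are all hypothetical, and the actual scoring rules are those defined in the evaluation itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    """One assessment element (hypothetical structure, for illustration only)."""
    section: str
    name: str
    max_points: float
    earned_points: float

    def __post_init__(self):
        # Reject inconsistent answers early.
        if not 0 <= self.earned_points <= self.max_points:
            raise ValueError(f"invalid points for element {self.name!r}")


def section_scores(elements):
    """Return {section: (earned, maximum)} summed over the elements of each section."""
    totals = {}
    for e in elements:
        earned, maximum = totals.get(e.section, (0.0, 0.0))
        totals[e.section] = (earned + e.earned_points, maximum + e.max_points)
    return totals


def overall_score(elements):
    """Normalize the total of earned points to a score out of 100."""
    total_max = sum(e.max_points for e in elements)
    if total_max == 0:
        raise ValueError("no assessment elements to score")
    total_earned = sum(e.earned_points for e in elements)
    return 100.0 * total_earned / total_max


# Made-up answers: two sections, three elements.
example = [
    Element("Section A", "element A.1", max_points=4, earned_points=2),
    Element("Section A", "element A.2", max_points=4, earned_points=1),
    Element("Section B", "element B.1", max_points=2, earned_points=2),
]

print(section_scores(example))
print(overall_score(example))
```

Keeping the per-section totals next to the overall score is a deliberate choice in this sketch: a single number out of 100 says little about which of the thematic sections deserves attention first.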

To give an example, the following image presents an evaluation element (the one on monitoring the performance of a model over time when it is regularly used for inference):

And this work continues! There are and always will be areas for improvement. This fall (2020), we are happy and proud to be able to count on the support of the awesome Dataforgood community, which selected the initiative as one of the 10 projects of its 8th season.

The form, content and tone of this evaluation are the result of the participatory and iterative work we have carried out. They also owe much to several valuable sources of inspiration in this regard. If I had to mention just three of them:

B-Corp: which in just a few years has become the reference impact assessment and label for positive impact companies;

ISO 27001: the undisputed reference for information security management. Although "heavy", when you work through the 100+ assessment points in Annex A you always come out of it "organizationally strong" and having learned new things;

“Don en confiance”: a framework for charities that receive donations.

To date, the evaluation is available as structured text, openly distributed on a GitHub repository from Substra Foundation under a Creative Commons BY-NC-ND license. It is therefore already usable and several companies have tested it.

To make it more widely and ergonomically accessible, we are working on a digital platform, a beta version of which should be online in mid-November 2020. We have also obtained support from the Ile-de-France Region and BPI France for this project, via the Innov'up innovation grant scheme.

[Update on Nov. 24th, 2020] The beta version of the web platform is now available at assessment.labelia.org. Try it out!

The objective is twofold: internally, to help organizations improve, and externally, to reassure their stakeholders

The primary objective, guided by this core idea of quickly offering a useful and actionable tool, is to enable interested organizations to carry out some internal work on responsible and trustworthy data science. As one makes progress through the evaluation and its various sections, one inevitably comes up against a subject one hasn’t yet mastered, which would be interesting to study in order to reach a higher level of maturity, and so on.

But one may also want to go further, and the question came up several times during workshops or presentations: when one’s evaluation is rather good and rewarding, can one talk about it to one’s stakeholders (e.g. partners, clients, candidates, etc.)? How can one report on it?

We treat external communication as a secondary objective, still to come because it requires considerable preparatory work. Indeed, the evaluation is offered as a self-service tool, a self-assessment. If we want to give it value and build trust in what it represents, clear rules must be set to avoid abuses that would completely undermine the initiative. Drawing on the reference assessment frameworks mentioned above, we have in mind in particular:

Define a threshold score, from which it is considered that the organization that achieves it has a good level of maturity on responsible and trustworthy data science;

Certify the self-assessments of the organizations that wish to do so by carrying out a mini-audit (e.g. on a few assessment points randomly sampled);

Provide certified organizations with a communication kit (logo, explanation sheet, etc.), and maintain a public list of certified organizations (to prevent false claims of certification).
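As a rough illustration of the first two rules, here is a small Python sketch of a threshold check and of a random draw of assessment points for a mini-audit. Everything in it is an assumption made for the example: the function names, the element identifiers `E01` to `E30`, the sample size and the threshold value are hypothetical, since none of these rules has been decided yet.

```python
import random


def pick_audit_sample(element_ids, sample_size, seed=None):
    """Randomly pick a few assessment elements to verify during a mini-audit."""
    ids = list(element_ids)
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    # Never ask for more elements than exist.
    return sorted(rng.sample(ids, min(sample_size, len(ids))))


def is_eligible(score, threshold):
    """True when a self-assessed score reaches the (yet to be defined) threshold."""
    return score >= threshold


# Hypothetical identifiers for about 30 assessment elements.
element_ids = [f"E{i:02d}" for i in range(1, 31)]

sample = pick_audit_sample(element_ids, sample_size=3, seed=2020)
print(sample)

# The threshold below is a placeholder, not a value set by the initiative.
print(is_eligible(score=55.0, threshold=50.0))
```

Recording the seed used for the draw would let both the auditor and the audited organization check afterwards that the sampled points were not hand-picked.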

Towards a responsible AI professional ecosystem

As we have seen, the theme is very topical and initiatives flourish. If we want to resolve this tension between the potential of AI techniques and the concerns they raise, I am convinced that reference frameworks will have to emerge for responsible and trustworthy practices of data science and AI. Naturally, the organizations most inclined to embrace such approaches will stand out from others, and thus form a specific ecosystem.

Our challenge, our ambition with this initiative, is to contribute to the emergence of an ecosystem of "responsible and trustworthy data science" players, a real community that shares and makes progress together (e.g. events and professional training, positions on regulatory issues under debate, monitoring, job ads, etc.).

Why don’t you join the effort?

Many thanks to all those who have contributed and are contributing to this initiative through their proposals, questions, feedback and remarks, or simply by attending the workshops. In no particular order: Anne-Sophie C., Cyril P., Jeverson M., Annass M., Mouad F., Romain B., Romain G., Mathieu G., Véronique B., Paul D., Raphaelle B., Augustin D., Annabelle B., Timothé D., Elmahdi K., Eric D., Anasse B., Céline J., Jérôme C., Amine S., Jeremie A., Benoît A., Grégoire M., Grégory C., Cédric M., Timothée F., Jean H., Sophie L., Vincent Q., Nicolas S., Marie L., Soumia G., Clément M., Nathanaël C., Fabien G., Nicolas L., Lamine D., Arthur P., François C. I have certainly forgotten some!

If you are interested, I invite you to:

Join and star the GitHub repository;

Join our Slack workspace and the #workgroup-assessment-dsrc channel;

Subscribe to the Substra Foundation mailing-list to receive information on upcoming workshops.

You can also contact me directly by email at eric at labelia point org.